Over spring break, the Helmholtz paper [PDF] was finished. (It is now posted as “On Helmholtz free energy for finite abstract simplicial complexes”.) As I will have little time during the rest of the semester, I am putting it out now. It is an interesting story, relating to one of the greatest scientists, Hermann von Helmholtz (1821-1894). The move from energy extremization to free energy extremization was probably one of the major ideas of the 19th century. Informally, one throws the system under consideration into a heat bath which, through randomization, smooths out the difficulties of the Hamiltonian dynamics or the complexity of the rest of the world. This fits applications well, as an experiment is usually in some thermal contact with the rest of the world, or with parts of the physics over which one has less control. Since the rest of the world can be of insane complexity, one idealizes the situation by adding an entropy term to the energy functional of the system under consideration. Mathematically, in the case of Hamiltonian systems, it is the transition from the microcanonical ensemble to the canonical ensemble. This step can most of the time not be justified mathematically (strictly speaking, it is even wrong: as KAM theory shows, a typical mechanical system is almost never ergodic, so the ergodic hypothesis usually fails), but from a practical point of view, it is an enormous simplification. Instead of varying the energy and so the energy surface, one can now play with a temperature parameter. In some sense, what statistical mechanics does is to allow other mathematical tools (especially the language of probability theory) to shed light onto the problem, and also to ignore the nitty-gritty difficulties of the underlying Hamiltonian system. It is a stochastic regularization. In the simplest case, when we have a finite space and energies $E_k$ are attached to its points, the natural measure is the Gibbs measure with weights proportional to $\exp(-\beta E_k)$, where $\beta$ is the inverse temperature.
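
To see the role of the temperature parameter in this simplest case, here is a minimal Python sketch of the Gibbs measure on a finite space. The energies are hypothetical toy values and the function name `gibbs` is ours; `beta` is the inverse temperature. High temperature (small `beta`) gives an almost uniform measure, low temperature concentrates the measure on the point of minimal energy.

```python
import math

def gibbs(energies, beta):
    """Gibbs measure p_k = exp(-beta * E_k) / Z on a finite space."""
    # Shift by the minimal energy so the largest exponent is 0 (avoids overflow).
    e_min = min(energies)
    weights = [math.exp(-beta * (e - e_min)) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]

E = [0.0, 1.0, 2.0]          # hypothetical energies, for illustration only
hot = gibbs(E, beta=0.01)    # high temperature: nearly uniform
cold = gibbs(E, beta=50.0)   # low temperature: almost all mass on E_0 = 0
```

The shift by `e_min` does not change the normalized measure, since the common factor cancels in the division by `z`.
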
Usually, write-ups of statistical mechanics start with the Gibbs measure and don’t mention that it is obtained from a variational problem, the minimization of the free energy. The idea of adding an entropy is also relevant in cosmology, where the entire universe is the laboratory. Why do physicists still think about heat baths and add entropy to the mix? Because the laboratory is so large that an energy equilibrium cannot be reached. A part of the universe like our solar system is always showered by waves from very distant parts which are not part of the local laboratory.
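
The variational characterization of the Gibbs measure follows from a Lagrange multiplier computation. This is a standard derivation sketch, assuming units in which Boltzmann's constant is 1, so that $\beta = 1/T$, and the Shannon entropy $S(p) = -\sum_k p_k \log p_k$:

$$F(p) = \sum_k E_k\,p_k - T\,S(p) = \sum_k E_k\,p_k + T \sum_k p_k \log p_k, \qquad \sum_k p_k = 1.$$

Setting the gradient of $F$ equal to $\lambda$ times the gradient of the constraint gives

$$E_k + T\,(\log p_k + 1) = \lambda \quad\Longrightarrow\quad p_k = \frac{e^{-\beta E_k}}{Z}, \qquad Z = \sum_j e^{-\beta E_j}.$$

For $T > 0$ the function $F$ is strictly convex on the simplex (its Hessian is $\mathrm{diag}(T/p_k)$), so this critical point is the unique minimizer, and plugging it back in gives the minimal free energy $F_{\min} = -T \log Z$.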

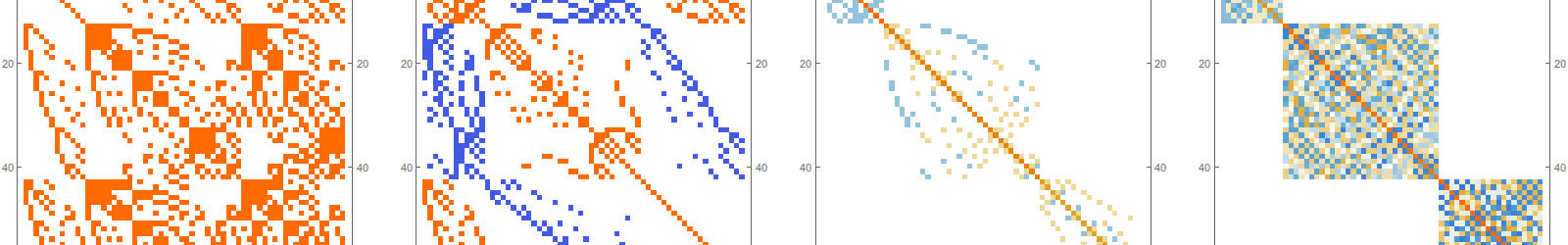

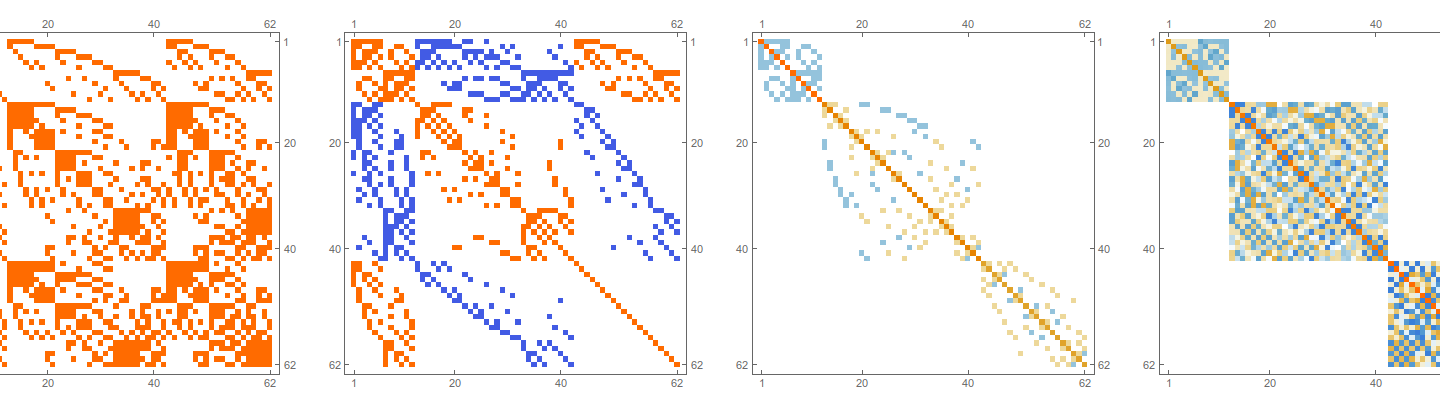

Why is this relevant when studying finite abstract simplicial complexes G? The reason is that there is a natural internal potential-theoretic energy associated to a complex. This became possible because the connection Laplacian L of a complex, the matrix with L(x,y) = 1 if the simplices x and y intersect and L(x,y) = 0 otherwise, is always invertible. The Green function values g(x,y), the entries of the inverse matrix $L^{-1}$, are integers. Now, as potential theory shows, every invertible Laplacian defines a potential $V_x$ centered at x. It is just $V_x(y) = g(x,y)$. The potential of a simplex x in the complex is then the sum $V(x) = \sum_y g(x,y)$. In the paper we prove a new Gauss-Bonnet formula which shows that this is actually a curvature. When adding this over the entire network, we get the theorem

$$\chi(G) = \sum_{x,y} g(x,y)$$
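
The theorem can be checked on small examples. The following is a minimal Python sketch, not the code used for the paper: it generates all simplices from a list of facets, builds the connection matrix (L(x,y) = 1 if x and y intersect), inverts it exactly over the rationals by Gauss-Jordan elimination, and compares the sum of all Green function values with the Euler characteristic. All function names are our own hypothetical helpers.

```python
from fractions import Fraction
from itertools import combinations

def simplices_from_facets(facets):
    """All non-empty subsets of the given facets, i.e. the simplices of G."""
    faces = set()
    for facet in facets:
        for k in range(1, len(facet) + 1):
            for c in combinations(sorted(facet), k):
                faces.add(frozenset(c))
    # Sort by dimension, then by vertices, so the ordering is reproducible.
    return sorted(faces, key=lambda s: (len(s), sorted(s)))

def connection_matrix(simplices):
    """L(x, y) = 1 if the simplices x and y intersect, 0 otherwise."""
    return [[1 if x & y else 0 for y in simplices] for x in simplices]

def inverse_and_det(m):
    """Exact Gauss-Jordan elimination over the rationals."""
    n = len(m)
    a = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(m)]
    det = Fraction(1)
    for col in range(n):
        # L is invertible (unimodularity theorem), so a pivot always exists.
        pivot = next(r for r in range(col, n) if a[r][col] != 0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det
        pv = a[col][col]
        det *= pv
        a[col] = [v / pv for v in a[col]]
        for r in range(n):
            if r != col and a[r][col] != 0:
                f = a[r][col]
                a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return [row[n:] for row in a], det

def euler_characteristic(simplices):
    """chi(G) = sum over simplices x of (-1)^dim(x), with dim(x) = |x| - 1."""
    return sum((-1) ** (len(x) - 1) for x in simplices)

def check_energy_theorem(facets):
    """Return chi(G), sum_{x,y} g(x,y), det(L) and the Green function g."""
    simplices = simplices_from_facets(facets)
    g, det = inverse_and_det(connection_matrix(simplices))
    total = sum(sum(row) for row in g)
    return euler_characteristic(simplices), total, det, g
```

For example, `check_energy_theorem([{1, 2}, {2, 3}, {1, 3}])` treats the circle with three vertices, for which both the Euler characteristic and the sum of the Green function values should be 0. Exact fractions are used so that the integrality of the entries of $L^{-1}$ is visible directly instead of being blurred by floating point rounding.
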

It shows that Euler characteristic is a potential energy of the geometry. We have seen this earlier in another context, where Euler characteristic showed affinities with an average sectional curvature and so with the Hilbert action. But how do we formulate the problem of minimizing the energy? Minimizing it over complexes is an interesting combinatorial problem, for example on Erdős-Rényi spaces, but it becomes difficult already for a dozen or so nodes. There is another possibility, motivated by quantum mechanics, where a wave ψ defines a probability measure $p = |\psi|^2$ on the space in which it moves. The idea is that rather than changing the space, we vary over probability measures on it. For a finite simplicial complex this is a nice variational problem if we take the potential energy $U(p) = \sum_{x,y} g(x,y)\,p(x)\,p(y)$. This definition of the energy of a measure is classical potential theory. An important case is when the space is the complex plane and L is the usual Laplacian, where, up to a constant factor, $g(x,y) = \log|x-y|$ and the energy is the logarithmic energy of a measure. In potential theory one can then look, for example, at measures which minimize the energy on a compact subset. This is not a trivial task, as there is a singularity at x=y: the energy of a measure supported on a finite point configuration, for example, is minus infinity. Sets on which every such measure has energy minus infinity are called polar sets in complex analysis. So, if we believe that the connection Laplacian is fundamental, then the corresponding energy is fundamental. Why do we think that the connection Laplacian is fundamental? Because of the remarkable fact that, independently of the simplicial complex G, the determinant of L is always 1 or -1, so that L is invertible and its Green function values are integers, bounded away from 0 unless they vanish. This is the content of the unimodularity theorem. The connection Laplacian is not associated to an exterior derivative d like the Hodge Laplacian, but it complements it nicely and has a regularity property which allows us to define a natural internal energy for a complex. 
Now the above theorem shows that if we take the uniform measure p, the measure of maximal entropy, then $U(p) = \chi(G)/n^2$, where n is the number of simplices in G: we get something topological, after rescaling by the size of the network. Nothing like that appears to happen for the Hodge Laplacian. We can regularize the inverse of the Hodge Laplacian by disregarding the zero eigenvalues, but then we also appear to filter away the nice topological content of these Laplacians, as Hodge theory deals with the kernels. It is not excluded that more topology can be found there, but we have not found any yet. For the connection Laplacian, there is a topological connection through the above theorem, and this is significant because Euler characteristic is a pretty unique functional in topology.
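
Here is a minimal Python sketch of the energy $U(p) = \sum_{x,y} g(x,y)\,p(x)\,p(y)$ on the smallest interesting example, a single edge G = {a, b, ab}, with simplices ordered (a, b, ab). The Green function matrix was computed by hand from the connection matrix, and the code re-checks that it really is the inverse. The names `G_EDGE`, `L_EDGE` and `energy` are ours. For the uniform measure one gets $\chi(G)/n^2 = 1/9$, while other measures give values that are not topological.

```python
from fractions import Fraction

# Connection matrix of G = {a, b, ab}, ordered (a, b, ab):
# L(x, y) = 1 if the simplices x and y intersect.
L_EDGE = [[1, 0, 1],
          [0, 1, 1],
          [1, 1, 1]]
# Its inverse g = L^{-1}, computed by hand.
G_EDGE = [[0, -1, 1],
          [-1, 0, 1],
          [1, 1, -1]]

# Sanity check: G_EDGE really is the inverse of L_EDGE.
assert all(sum(L_EDGE[i][k] * G_EDGE[k][j] for k in range(3)) == int(i == j)
           for i in range(3) for j in range(3))

def energy(g, p):
    """Potential energy U(p) = sum_{x,y} g(x,y) p(x) p(y)."""
    n = len(p)
    return sum(g[i][j] * p[i] * p[j] for i in range(n) for j in range(n))

n = len(G_EDGE)
uniform = [Fraction(1, n)] * n
u_uniform = energy(G_EDGE, uniform)                             # chi/n^2 = 1/9
u_edge = energy(G_EDGE, [0, 0, 1])                              # point mass on ab
u_vertices = energy(G_EDGE, [Fraction(1, 2), Fraction(1, 2), 0])  # half on a, half on b
```

The point mass on the edge ab has lower energy (-1) than the measure spread over the two vertices (-1/2), which already hints at minimizers being supported on a smaller part of the complex.
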

Having seen that entropy also has a pretty unique characterization (see here), why not combine the potential energy and the entropy as Helmholtz did?
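
A natural candidate, sketched here under the assumption that the entropy is again the Shannon entropy $S(p) = -\sum_x p(x) \log p(x)$ (the paper may normalize differently), is

$$F(p) = U(p) - T\,S(p) = \sum_{x,y} g(x,y)\,p(x)\,p(y) + T \sum_x p(x)\log p(x).$$

Since L is symmetric, so is g, and the gradient of U at x is $2\sum_y g(x,y)\,p(y)$. At a critical point with full support, the Lagrange condition with constraint $\sum_x p(x) = 1$ reads

$$2\,V_p(x) + T\,(\log p(x) + 1) = \lambda, \qquad V_p(x) = \sum_y g(x,y)\,p(y),$$

so that, with $\beta = 1/T$,

$$p(x) = \frac{e^{-2\beta V_p(x)}}{\sum_z e^{-2\beta V_p(z)}}.$$

Unlike the Gibbs case, the potential $V_p$ depends on p itself, so this is a fixed point equation rather than an explicit formula. For large T the entropy term dominates and makes F strictly convex, so the solution is unique; for small T the quadratic form U, which is in general not convex, can produce several competing solutions.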

In some sense, we throw the simplicial complex into a heat bath with some temperature and look for the measures which minimize the free energy. This has turned out to be really exciting because interesting bifurcation phenomena appear. We even see catastrophes. Catastrophe theory is a bit out of fashion (maybe because it is too easy), but it is nice teaching material for single variable calculus, as seen here [PDF]. So, it turns out that we don’t have to take any thermodynamic limit: already the finite-dimensional variational problem on a finite simplicial complex, and so on a finite probability space, shows interesting behavior. While for high temperature the minimizing measures are unique and change continuously with the temperature parameter, bifurcations can happen for low temperature. The minimizing measure can then change discontinuously. I always mention the Gibbs free energy example when teaching Lagrange multipliers in multivariable calculus, and there one can see that for temperature T=0, when the heat bath is absent, there is a unique minimum. But this minimum is only unique if the support of the measure p is the entire space. What happens if we throw the simplicial complex into the heat bath and cool it down is that we end up with a measure which is in general supported on a smaller part of the network. In some sense, the heat bath treatment has solved the problem of minimizing the Euler characteristic. It could be a numerical scheme to find simplicial complexes which minimize the discrete Hilbert action and so serve as Einstein manifolds. Of course, this still has to be investigated. In any case, it was fun this week to throw the geometry into the heat bath and then change the temperature to see the bifurcations happen.
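
The heat bath experiment can be imitated on a toy example. Below is a minimal Python sketch, assuming the free energy $F(p) = U(p) + T\sum_x p(x)\log p(x)$ and the one-edge complex G = {a, b, ab} with its hand-computed Green function. The minimization scheme, exponentiated gradient descent on the simplex restarted from several initial measures, is our own choice and not necessarily the method of the paper; all function names are hypothetical. At high temperature every start lands on the same nearly uniform measure; at low temperature there are two competing local minimizers, one spread over the vertices a and b and one concentrated on the edge ab, and the latter has the lower free energy.

```python
import math

# Green function g = L^{-1} of the toy complex {a, b, ab}, ordered (a, b, ab).
G_EDGE = [[0, -1, 1],
          [-1, 0, 1],
          [1, 1, -1]]

def free_energy(g, p, T):
    """F(p) = sum_{x,y} g(x,y) p(x) p(y) + T * sum_x p(x) log p(x)."""
    n = len(p)
    u = sum(g[i][j] * p[i] * p[j] for i in range(n) for j in range(n))
    neg_entropy = sum(q * math.log(q) for q in p if q > 0)
    return u + T * neg_entropy

def minimize_from(g, p0, T, steps=3000):
    """Exponentiated gradient descent on the simplex, started at p0.

    Update: log p <- (1 - eta*T) log p - 2*eta*(g p), then renormalize.
    This converges to a local minimizer of F, which may depend on p0.
    """
    n = len(p0)
    # |2 (g p)_i| <= 2 * max row sum of |g| for any probability vector p.
    bound = 2 * max(sum(abs(v) for v in row) for row in g)
    eta = 1.0 / (2.0 * (T + bound))  # keeps eta*T < 1/2
    logp = [math.log(q) for q in p0]
    for _ in range(steps):
        p = [math.exp(v) for v in logp]
        gp = [sum(g[i][j] * p[j] for j in range(n)) for i in range(n)]
        logp = [(1 - eta * T) * logp[i] - 2 * eta * gp[i] for i in range(n)]
        m = max(logp)  # log-sum-exp normalization, stable for tiny weights
        log_z = m + math.log(sum(math.exp(v - m) for v in logp))
        logp = [v - log_z for v in logp]
    return [math.exp(v) for v in logp]

def minimize(g, T):
    """Multi-start: the uniform measure plus one start biased to each simplex."""
    n = len(g)
    starts = [[1.0 / n] * n]
    for k in range(n):
        starts.append([0.9 if i == k else 0.1 / (n - 1) for i in range(n)])
    results = [minimize_from(g, s, T) for s in starts]
    return min(results, key=lambda p: free_energy(g, p, T))
```

With T = 0.05, the run started at the uniform measure should end near (1/2, 1/2, 0) with free energy close to -1/2 - T log 2, while `minimize` should return a measure with almost all mass on ab and free energy close to -1. Restarting from several measures matters: a single gradient run only finds the local minimizer in whose basin it starts.
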

It is maybe no accident that Oscar Lanford appeared again and again in this project: first in the form of the Bowen-Lanford zeta function, then in the hyperbolicity of the unit spheres in the Barycentric refinement of a simplicial complex, then in the context of measures minimizing the free energy (related very loosely to Dobrushin-Lanford-Ruelle measures), and then through bifurcation topics (which I learned in two dynamical systems courses taught by Lanford). It would not be surprising if the bifurcation patterns became universal in the Barycentric limit (Oscar Lanford was the first to give a proof of Feigenbaum universality).

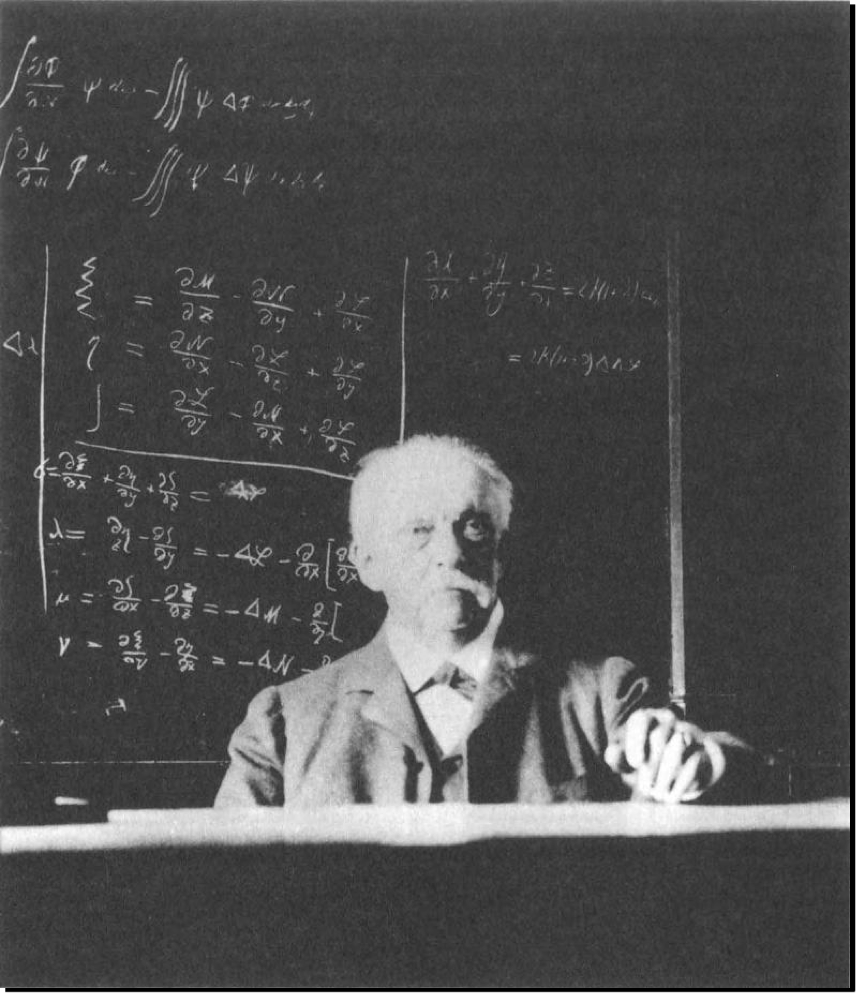

Finally, here is a picture of Hermann von Helmholtz taken in February 1894 in Berlin. Helmholtz died later that year. The picture is from the book “Schriften zur Erkenntnislehre”, which contains annotated papers of Helmholtz.

Here is a nicer picture in color showing Helmholtz at work:

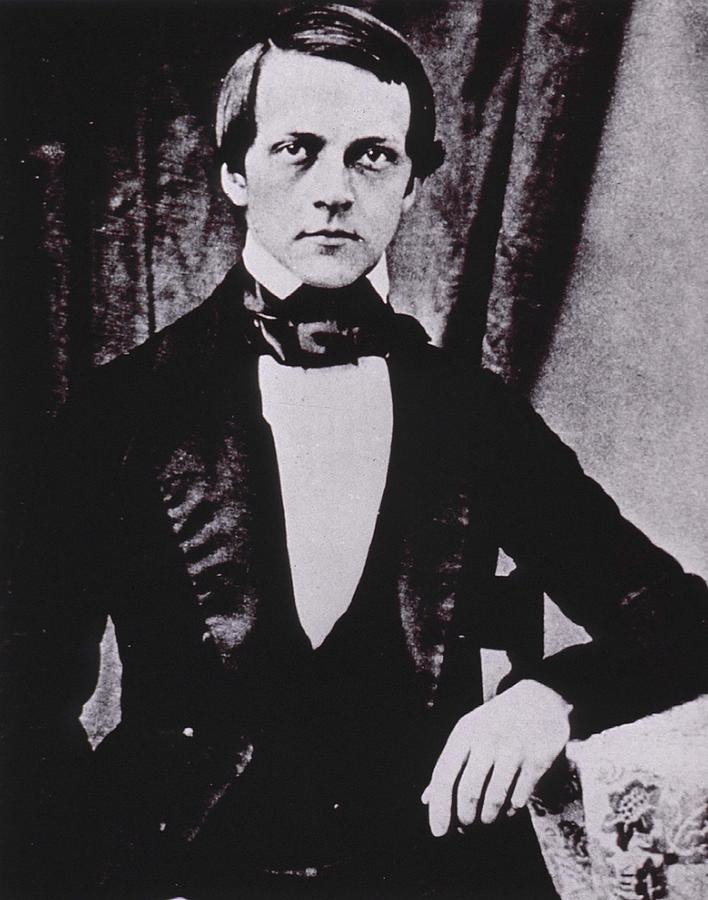

And here is a picture of him as a younger man, from around 1850: