In any geometry the concept of duality is important. We know Poincaré duality, dual polyhedra, Alexander duality, duality in projective geometry, Pontryagin duality in harmonic analysis, mirror symmetry in complex/symplectic geometry or dual matroids in combinatorics. Given a bililinar form one has dual vector spaces and spaces for which duality holds like Hilbert spaces are important. For graphs, one can define the dual graph, which has the same vertex set but connects two points if they were not connected in the graph itself. In the previous post we have looked at the problem to extend Alexander duality (which becomes a rather elemenyary duality for simplicial complexes) to connection cohomology. It looks as if one can not port that so easily, unlike Poincare duality which is based on an inner product for forms.

While searching around, we have looked last week at the following definition. Given the connection Laplacian $L(x,y)$ which is defined to be 1, if x and y intersect and 0 if not, one can look at the dual connection Laplacian $\overline{L}(x,y)$ which is defined to be $1$ if $x$ and $y$ do {\bf not} intersect and $L(x,y)=0$ if they do. This dual Laplacian in general does not have an inverse. Here is a new theorem which is a corrollary of the energy theorem (The relation about the determinant below was discovered on Friday, April 13, 2018), first experimentally fooling around with the dual connection matrix, the proof was found shorly after on Saturday, April 14, 2018):

| Theorem: $\det(-\overline{L} L)=1-\chi(G)$, where $\chi(G)$ is the Euler characteristic of the complex $G$. |

Proof: The theorem directly follows from the energy theorem and the unimodularity theorem. If $E$ is the matrix $E(x,y)=1$ and $g=L^{-1}$ the inverse of $L$, then $\overline{L}=E-L$. Now

$$ \det(-\overline{L} L) = \det(-(E-L) L) = \det(-(E-L) g) =\det(-E g + 1) \; . $$

Now look at the eigenvalues of the matrix $E g$. The energy theorem assures that each row adds up the Euler characteristic, so [this is what students always consider to be a “trick” in linear algebra but it actually is a “method” as it appears in any Markov situation], there is an eigenvalue $\chi(G)$ of $Eg$. The other eigenvalues are 0 as all columns are parallel. Now this implies [and also this is a standard “method” we teach in linear algebra to compute determinants] that the eigenvalues of $-Eg+1$ are $1-\chi(G)$ and $1$. The product of the eigenvalues is now $1-\chi(G)$. QED.

[Forgive a jab about math education here, which actually should not belong here, but the above example (featuring a “trick” in linear algebra) illustrates an important point in K-12 education. While it has changed recently, this branch of math education (swapping over to college too unfortunately) used to be very “anti knowledge”, especially in the US. The mantra was “YOU SHALL NOT MEMORIZE STUFF”, “DO NOT LEARN ALGORITHMS”. It was a disaster and we still live the consequences. Some of the brightest minds of our time do not know how to find the solution of a quadratic equation or do not know the definition of trig functions because they were taught “LEARNING BY HEART IS EVIL”. No other science has this devastating philosophy. It is maybe only topped only by general religious or ideological anti science movements . So, why is it important to know algorithms and know definitions, know tricks? Because all of science is made of “knowledge” (it is in the name of it even). The dream of AI of “figuring out things on your own without initial input” did not only fail for machines. It also did not work for education. If “Jenny and Jonny” do not know stuff, then even doing the most basic things become frustrating. It has maybe been sad to see this, but much of modern AI success is “knowledge”. Alexa, Siri, Wolfram Alpha, Cortana, IBM Watson or whatever they are all called, KNOW a lot of stuff or KNOW to look up stuff and they have become so good at it, that they will soon pass the Turing test with flying colors. It has been known since the early AI research that “knowing how to solve a problem is the best and fastest way to solve it” (See this working paper where we worked for one year to teach a machine mathematics (the bot had access to various computer algebra systems and web etc)). Not that teaching to “figure out things is bad, what happens is that

| Creativity can only blossom, if there is a fertile ground of knowledge available already. Knowledge is the soil on which creative plants can grow! |

It will become scary if AI will figure that “meta knowledge” out (they will) and learn and learn. it is an old fear about AI: You certainly have seen the cult movie “Wargames” with Matthew Brotherick where the machine learns.) But it is important that it is also “knowing”, not just being able to “look up”. Of course, nobody should learn the 100×100 multiplication table by heart, that is non-sense, but knowing the 10 x 10 multiplication table is a useful “prototype”, like knowing TIC-TAC-TOE is an important prototype in Game theory. The multiplication table is a fertile ground to discover laws about prime numbers or understand the multiplication table in an abstract group, see what commutativity means etc. A chemist who does not know a wide variety of compounds and mechanisms will not be able to figure out a new one. Knowledge is a complex network and it becomes more powerful if more nodes are available. It can grow faster and discover new nodes faster if it is larger. More knowledge also helps to consolidate things. When learning a language (whether it is a computer language or a real language (I currently try to learn Spanish with Duo Lingo, which does a good job in helping to memorize things). I learned PASCAL in college from a professor who would write down the specifications of the language on the blackboard, I think it is still the programming language, I understand best, maybe because of a bit of a “mindless” approach, but feeding the specifications with insight and examples and general principles, it was quite effective together of course with lots of programming beside that lecture). Knowing category theory in mathematics for example allows to see many special cases as a general phenomenon and many collapse knowledge nodes to one, making the knowledge network more effective. To wrap it up, not realizing the simple fact that “daemonizing knowledge is evil” is in my opinion one major reason why K-12 education in the US does not do so well internationally (as tests show). An other reason of course is the low pay for teachers, especially in K-12. It should at least double. In Switzerland for example the pay is much higher. A third reason is the diversification of knowledge. Computer science and statistics for example have entered earlier in the classrooms (which is good), but that of course takes away time from actual mathematics. Time is bounded and the game of choosing subjects is a constant sum game. If you add more calculus for example, then this hurts geometry. And we see that. Geometry knowledge for example is in general much lower than 20 years ago. But students also know already more calculus than 20 years ago when they come to college. End of rant.]

Well, the story is not over, there is still a lot to discover here. You can experiment yourself. Here are some excercises. Here is one which is much easier than the above theorem: the matrix $E \overline{L}$ has one major eigenvalue and $0$ eigenvalues for the rest. What is geometric significance of the major eigenvalue geometrically?

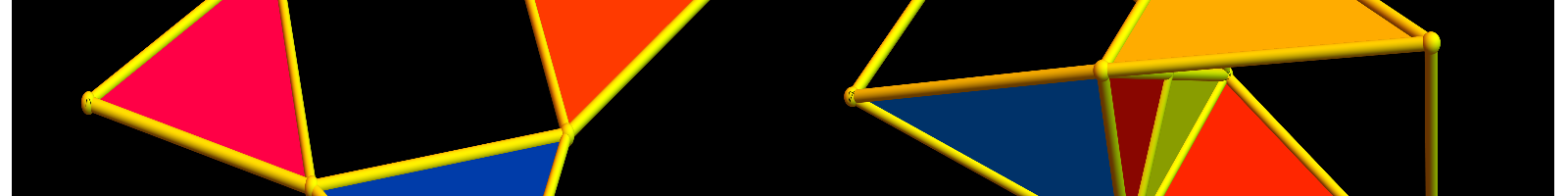

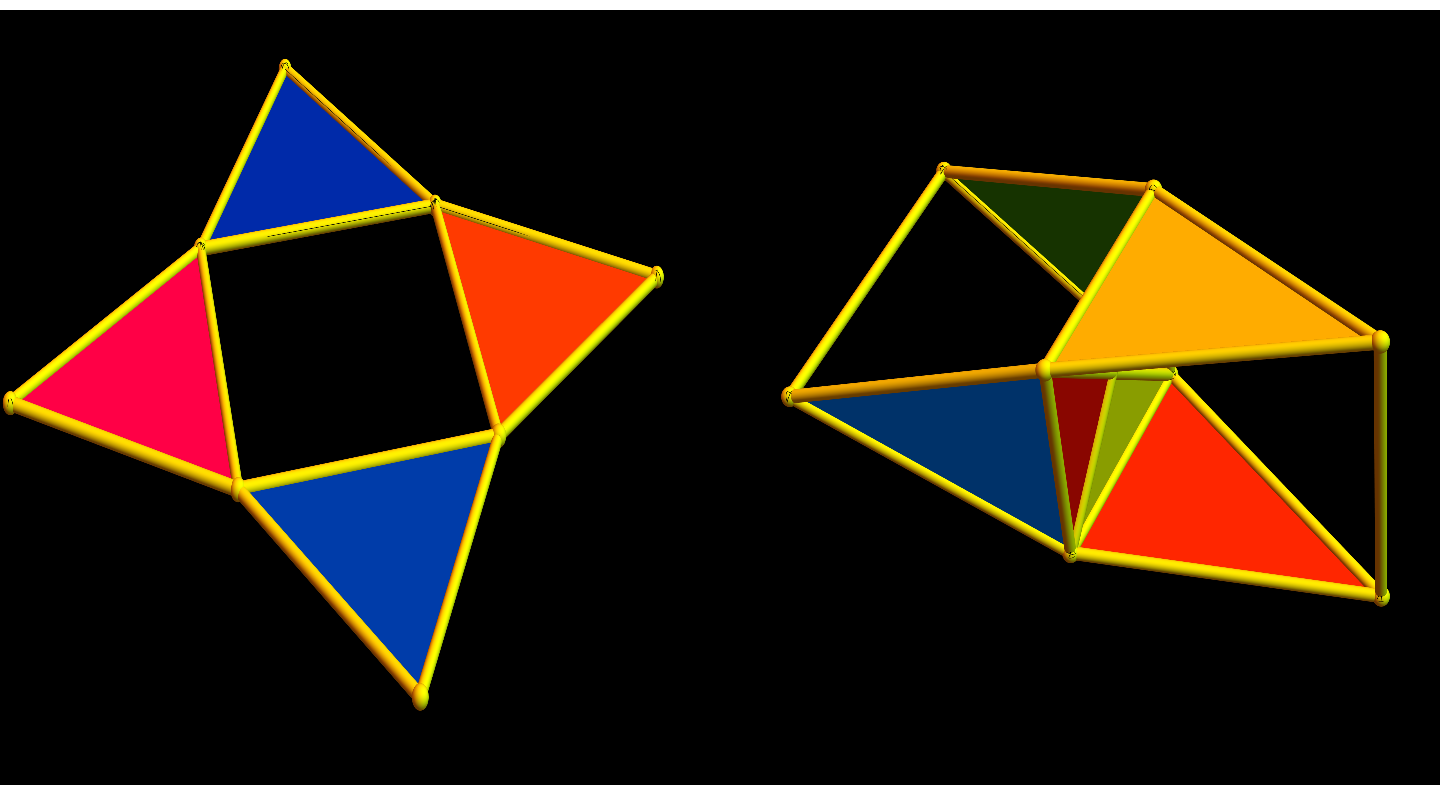

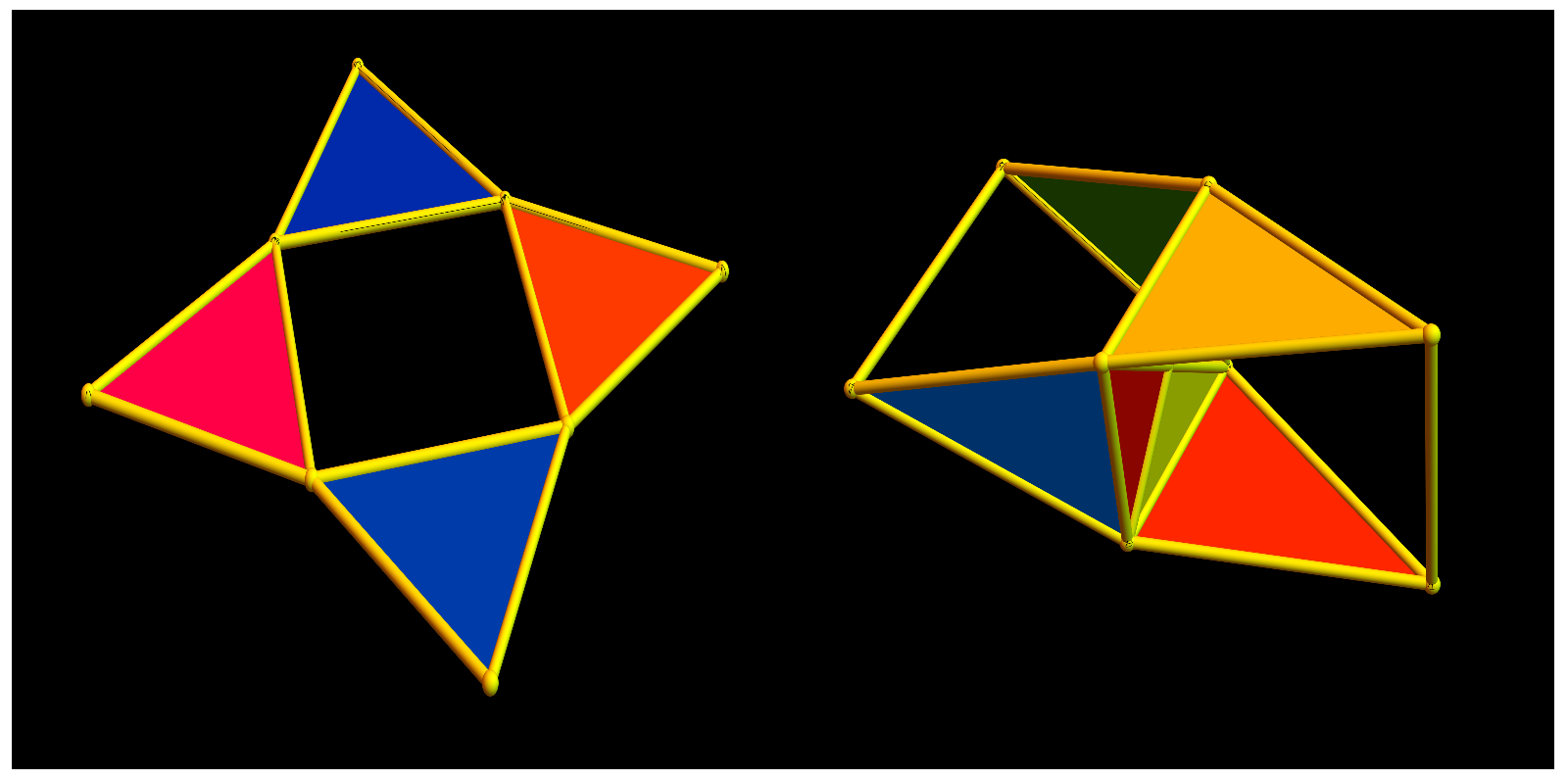

Finally, lets explain the picture in the “icon” for this blog entry: the left graph is the connection graph of the circular graph with 4 elements. This graph has the simplices in that graph as vertices and connects two if they intersect. On the right hand side we have the same vertices, but we connect two simplices, if they do NOT intersect. This is the dual connection graph.