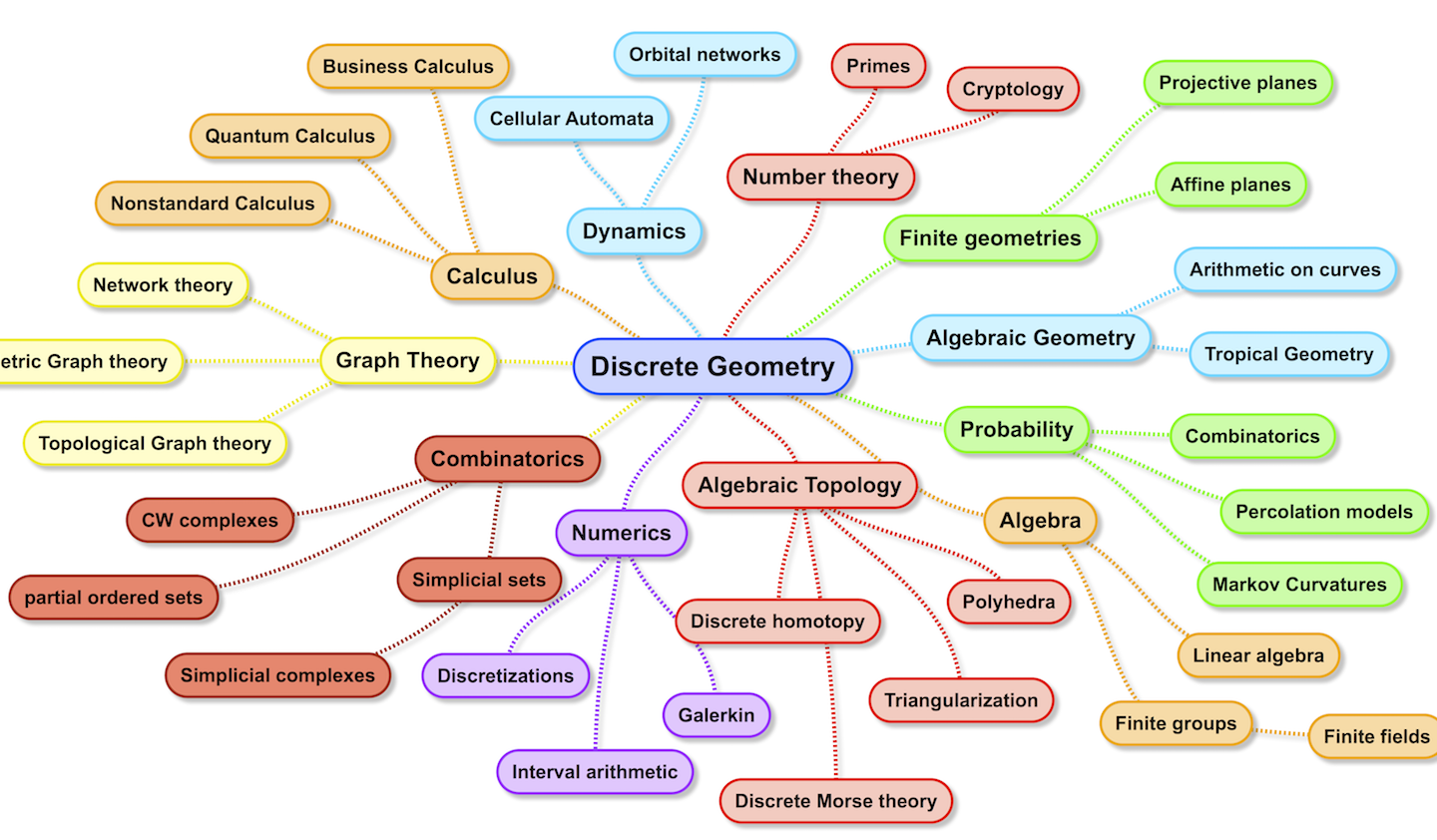

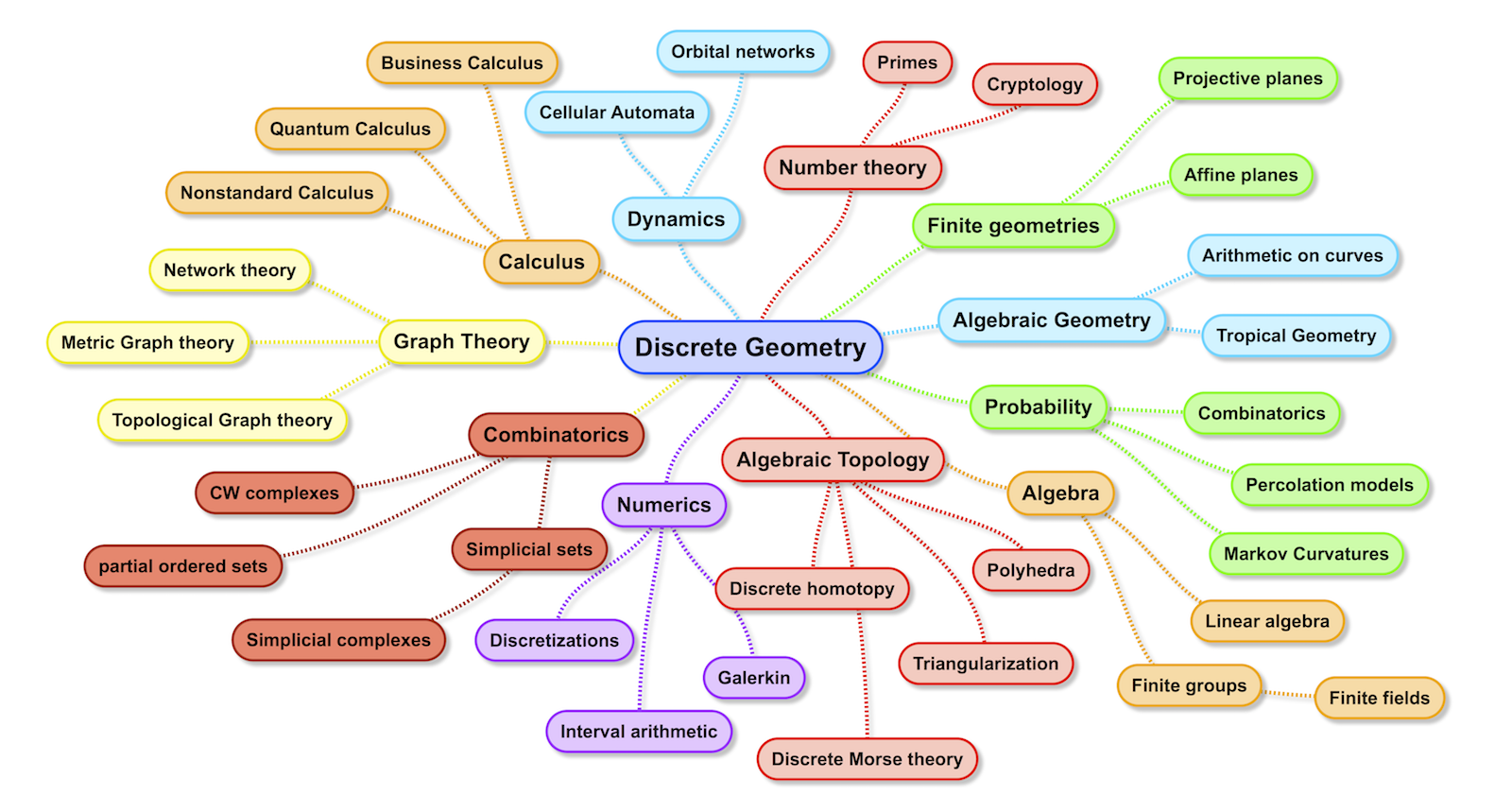

The field of discrete geometry is huge. There is a maze of different flavors of geometry. Here is a spontaneous drawing made with a mind map software:

Why study discrete structures?

One reason is that finite structures are applicable as well as approachable. Finite structures allow experimentation without the need of approximation. We know that everything is exact, at least as long as the structures are small enough to be build in a computer. Not every part of mathematics has this sense of certainty. When measuring quantities like entropy in the ergodic theory of dynamical systems for example, we have no idea how exact the results are, nor do we know whether the results are even qualitatively correct. Smooth ergodic theory is a place where results and mathematics still are very far apart: we measure positive entropy in examples like the double pendulum to the solar system but have not the slightest clue whether these measurements are mathematically true. Even in toy examples like the Chirikov map $T(x,y)=(2x-y+c\sin(x),y)$ on the two-dimensional torus $T^2$ we measure entropy $\geq \log(c/2)$ but do not know whether it is positive for one single $c$.

The Kronecker ideal

Discrete structures revive the Kronecker ideal dreamed up already by the Pythagoreans: everything can be described by integers. Discrete approaches can be refreshing. Modern mathematics contains so many abstract structures that it can be intimidating. Cantorian or Bourbaki revolutions in abstraction have recently been augmented by higher category and derived algebraic geometry avalanches which make Cantor’s approaches look like a children’s game. My own interest in the relations between the discrete and continuum was sparked by various teachers: Erwin Engeler who was a teacher of three basic undergraduate courses of mine (topology, mathematical software and theory of computation) exposed us once to the idea of “finitism” or “ultrafinitism” strains of mathematics. This is more radical than Brouwer’s intuitionism. While Brower’s intuitionism narrows down the logic in proofs, finitism refutes the infinite.Ultrafinitism even discards large integers like $10^{10^{10}}$. Oscar Lanford who was my PhD advisor explored experimentally the boundaries between the continuum and discrete in computations, especially in dynamical systems theory. Lanford was an expert in developing finite interval arithmetic methods, to prove results in analysis. Also this is a finitist approach as a mathematical proof is based on finitely many estimations using integers. Then, there was Ernst Specker, from whom I not only took a two semester introduction to linear algebra but also various logic and set theory courses. Specker told us about Paul Edwin Kustaanheimo’s ideas of using finite fields to do physics and mused in a class about the relation between the continuum and discrete. It was Peter Laeuchli, also a Logician, from whom I not only took my first two semesters of calculus as a freshman, but also a course on forcing and a course on nonstandard analysis. In the later course, Laeuchli demonstrated how many proofs dealing with compact metric spaces collapse in complexity using the new axioms of internal set theory (IST): much of the traditional mathematics is reduced to the mathematics on finite sets. In one of the Lauchli-Specker seminars, I went once through the exercise to prove van der Waerden’s theorem using Fürstenberg’s recurrence theorem, which was – and this was the original contribution – proved using nonstandard analysis. Recurrence results are especially approachable by non-standard analysis as recurrent motion becomes a periodic motion with non-standard period.

Mapping out the discrete

A subject of mathematics can be considered in the discrete if discrete notions approximate the structure. Examples are continued fraction approximations of real numbers, polyhedra approximations of manifolds, polynomial approximations of real analytic functions, finite groups parallel compact Lie groups, finite topologies illustrate examples and counter examples in topology. Probability theory on finite spaces, arithmetic on finite fields, interval arithmetic for computer assisted proofs, numerical schemes to solve partial differential equations, in particular Galerkin approximations and subsequent discretizations of partial differential equations, from tight binding approximations of Schrödinger operators, to Markov chain approaches to stochastic differential equations, statistical mechanical models like percolation or spin glasses or cellular automaton help to understand complicated statistical mechanical situations in nature like crystals, metals, condensates, fluids or gases. A system like a partial differential equation can be discretized in different ways. We can discretize time, leading to coupled map lattices discretize space leading to ordinary differential equations, discretize both leading to maps. One can also discretize the target space. In a translational symmetric setup, this leads to cellular automata if time is discrete or neural networks if time is continuous.

| Time | Space | Target | Model |

| discrete | discrete | discrete | cellular automaton |

| discrete | discrete | continuous | quantum rotor |

| discrete | continuous | continuous | iterating maps |

| discrete | continuous | discrete | diffusion limited aggregation |

| continuous | discrete | discrete | neural networks |

| continuous | discrete | continuous | coupled map lattices |

| continuous | continuous | discrete | mean curvature flow |

| continuous | continuous | continuous | partial differential equation |

In principle, every numerical simulation is a discretisation, as a computer has only finitely many memory states and the time evolution of any system is a path in this finite set. Already in simplest cases like one-dimensional maps on the interval or two-dimensional maps one does not understand the discrete approximations. An other source for discretization is quantization. Quantization has dramatic consequences, like that observables stop being commutative and that previously integrable systems lose this property. What happens under quantization is that arithmetic operations become no more local. A simple example is the quantized line. While the real line is described by the algebra of continuous functions $C(R)$, the quantized line is described by crossed product algebra defined by the translation $\sigma: f(x) \to f(x+\epsilon)$. This algebra is no more commutative as $[\sigma, g] = [g(x+\epsilon)-g(x)] \sigma$. Discretisation also destroys symmetries in general: continuous symmetries given by Lie group actions like rotations are reduced to discrete automorphism groups. The just described non-commutative real line has $Z$ as automorphism group while $R$ had the automorphism group $R$. When discretizing a partial differential equation like a wave equation using a finite grid, the geometry of the discretization matters. If not done properly, discretizations break additional information on the space like symplectic structures. Floating point arithmetic is a rather opaque discretization of the real numbers destroys some axioms of the field of real numbers: distributivity for example does not hold any more. The dynamics of $f(x) = 4x(1-x)$ and $g(x) = 4x-4x^2$ is different already after 70 steps when performed with 16 digit arithmetic on a computer algebra system: in general, as errors double for this system we expect for $n>\log_2(10^n)$ to see the “floating point boundary” when computing with $n$ digits.

The continuum as idealisation

We can think about continuum structures as idealizations. Knowing the discrete approximations contains much more information. We can talk about the number $\pi$ and know its properties but we have difficulty to understand the discrete decimal approximations as we don’t even know whether every digit appears with the same frequency: the normality of $\pi$ is an open mathematical problem in any base $b$. But we do not even have to go to infinite structures to see how clueless we are: it might take a long time for example until we will be able to factor the product $n=pq$ of two 1000 digit prime numbers $p,q$. Numbers like the googolplex $n=10^{10^{100}}$ can not even be written down, as we believe there are less elementary particles in the observable universe than digits of this number. While we know how to factor $n$, we don’t have the mathematics yet to factor $n+1$ if $n$ is the googolplex. No computer could even hold this number in its memory when writing it down in base $10$. The limitations of finite combinatorics are well known: we know that Hamiltonian systems are Poincaré recurrent but even with a few dozen particles in a two chamber box, the probability that all particles will return to one chamber is so small that it is considered impossible as it does not happen in the lifetime of the universe. We do not need a Maxwell demon, we only need to wait long enough to beat the second law of thermodynamics, as it is a statistical law. Even with a large box, we can wait until the density is larger on one side of the room and use this gradient difference to gain energy. The problem is that waiting even for very small chambers would take longer than the life time of the universe so that is impossible. But it is important that it is not a mathematical impossibility but a practical impossibility.

Estimating large numbers has a long tradition. Archimedes estimated the number of sand grains surprisingly well in the “sand reckoner”. He was many orders of magnitude wrong but on the googol scale he was close. Mathematicians like Randall have computed the probability that a can of beer tips or a human tunnels to Mars by quantum fluctuations or that a mouse survives on the sun for an hour. Like the famous monkey typing Shakespeare, all these probabilities are not zero, but they are so small that we can not even imagine their smallness. An other example: from a practical point of view, the harmonic series stays bounded below $100$ even so it diverges mathematically. The Petersburg paradox shows in practice that a player loses, even with a modest $10$ dollar entrance fee. The reality however is that even with a $1000$ dollar entrance fee, the player would win in the long term. Again, the expectation time for overtaking the losses is so large that it is impossible to be achieved in a life time. For the martingale strategy in a casino (double until you win, then leave), the probability to perish is real and was the reason for many disasters. These examples show discrepancies between mathematics and experience. The barrier is not qualitative but quantitative. The numbers are often too big to become relevant.

Flavors

- Some notions in graph theory are directly taken from the continuum. Examples are the geodesic distance, the connectivity components, homotopy or cohomology. Related is the field of topological graph theory which deals with the question of graph embeddings in manifolds. An example of a result in topological graph theory is the Kuratowski theorem which assures that a graph is planar if and only if it does not contain a homeomorphic copy of $K_5$ or $K_{3,3}$. Many notions from the continuum adapt to the discrete. Examples are Brouwer index, degree of a map, Euler curvature, sectional curvature and Ricci curvature. Even more remarkable is that the results in the discrete essentially look the same in the graph case. Metric graph theory deals with finite graphs equipped with a metric. If we want to keep things truly discrete, we can assume that the distances are all rational numbers.

- Discrete Morse theory is a theory for cell complexes. It is close to classical differential geometry. There is a notion of simplicial collapse which corresponds in the Ivachenko approach to homotopy of graphs. There is a more elementary approach which only needs notions of graph theory. In order push over the weak and strong Morse inequalities, there is a notion of Morse function which essentially means that the function defines a Morse filtration $M_c = \{ f \leq c \}$ such that the number of cells changes only by one at “critical points”. A critical point for $f$ is a point, where $S^-(x) = \{ y \in S(x) | f(y) < f(x) \}$ is not contractible. The index of a critical point is $1 -\chi(S^-(x))$. As in the continuum, it is possible that critical points can have zero index. This is the case if the Euler characteristic is $1$ even so the graph is not contractible.

- Discrete computational geometry deals with problems of discrete objects located in manifolds. Examples are polyhedral approximations, triangularizations like Delaunay triangularizations, tiling systems, Voronoi diagrams, rigidity, convex hulls, geodesics, particle collision or coloring problems. Symmetry patterns. Finding the convex hull of a set of points for example is important to get an idea of the shape of the point cloud. The point is not so much combinatorial. The metric, the angles and distances given in Euclidean embeddings still play a role.

- A piece of sound is encoded by finite sequence $(l,r)$ of integer-valued sequences, where $l,r$ are the left and right sound channel. Typically, music is sampled 44’100 times per second and then compressed to a data format like MP3. A picture with $n \times m$ pixels is encoded in the form of three matrices $(r,g,b)$, taking values in the finite byte set $\{0, \dots, 255\}$ of cardinality $2^8$, where $r,g,b$ are $n \times m$ matrices encoding red, green and blue. A movie is a finite sequence of pictures and sound. It is clear already from the fact that a movie can be stored on a finite storage area that everything here is discrete.

- Finite affine and projective planes can be defined axiomatically with even referring to finite fields. An affine plane is a finite set $P$ of points and a finite set $L$ of lines with a geodesy parallel and dimension axiom: for every pair $(x,y)$ of points there is exactly one line through $x,y$, through a line and a point away from the line, there is a unique parallel line. Thirdly there are four not collinear points. For a finite projective plane, the parallel axiom is replaced with the property that any two lines intersect in exactly one point. Finite geometries are difficult to handle: does not know whether the order, the number of points on a line, of a finite plane it seems always to a power of a prime. The smallest $n$ for which one does not know the answer is $n=12$.

- Algebraic geometry over finite fields is part of number theory. A particular branch deals with the arithmetic on elliptic curves. For example, for the field $Z_{11}$, the curve $y^2=x^3+2x+5$ is a subset of the finite set $Z_{11} \times Z_{11}$ which defines a finite Abelian group. Problems which have been studied on Riemann surfaces can naturally be pushed to algebraic curves. Arithmetic number theory has many applications in cryptology, for example because one can use the Abelian Jacobean group associated to a curve for pushing RSA encryption or Diffie-Hellman key exchange algorithms.

- Quantum calculus comes in many flavors. Connes defines quantized calculus through a derivative $df=[F,f]$, where $F:H\to H$ is an involution like the Hilbert transform. For calculus on graphs, space is an arbitrary finite simple graph, it carries an exterior derivative $d$ and so a Dirac operator $D=d+d^*$. Since $u(t) = e^{i D t} u(0)$ is a solution of the wave equation $u” = -D^2 u$. The wave equation is important because it allows to define a notion of geodesic, which is an essential generalization of the concept of line. Given a collection $S$ of $k+1$ subgraphs and an anti-symmetric function $f$ on the set of all $k+1$ subgraphs, then $\sum_{x \in S} df(x) =\sum_{x \in \delta S} f$, where $\delta S$ is the boundary chain. This is Stokes theorem, the fundamental theorem of calculus.

- Robinsons’s nonstandard analysis is based on notions of ultrafilters and extends the real axes to a larger set of numbers which includes nonstandard numbers. An alternative approach called internal set theory extends the ZFC axiom system with new axioms. This allows to deal with infinite and infinitesimal numbers without changing the language much. One can for example write $e^x = (1+x/n)^n$, where “n numerus infinite magnus” (Euler). The topic works also to describe stochastic processes as Nelson has shown. One can deal with infinitesimals also algebraically: just let $h$ be the unit in the space of dual numbers $R[x]/x^2$. Then $f(x+k h) = f(x)+k f'(x) h$. Here is a lemma, which allows to apply graph geometry to compact metric spaces $(X,d)$: Let $V \subset X$ be a finite $\epsilon$ dense set in $X$. Let $E$ be the set of pairs $(x,y)$ such that $d(x,y) < 3\epsilon$. Now look at the finite graph $G=(V,E)$ which depends on $\epsilon>0$. Now narrow down the class of metric spaces in that we ask that for small enough $\epsilon>0$, the graphs are homotopic. This now implies that we can define a cohomology $H^k(X)$ and Euler characteristic for these spaces.

- Regge calculus is a flavor of discrete differential geometry which was developed to make computations in general relativity. It is used in gravitational physics and statistical mechanics and other parts. Unlike graph theory, it includes metric structures like distances. This is done in such a way that if embedded into a space time manifold one gets angle between different edges.

- A spin network is a directed graph with an integer-valued function attached to edges. Some of the mathematics developed first by Penrose is used for loop quantum gravity, a candidate of a theory aiming to describe quantum gravity.

- Cellular automata have been defined by Hedlund as automorphisms of the shift dynamical system. Since every sequence of numbers taking values in a finite set defines a compact metric space, cellular automata can be seen as maps which lead from one system to an other. Cellular automata can be more generally defined as maps on the set of colorings on a graph which commute with the automorphism group of the graph. Wolfram did thirty years ago some pioneer work in that area. There is a related field of lattice gas automata, a numerical scheme for studying fluids.

- A probabilistic approach for curvature on metric spaces has turned out to be interesting also in purely discrete situations.

- Combinatorial topology was seriously developed at the time of Ricci and Poincaré. An example illustrating the power of discretizations is the area of fixed point theorems. Brouwer’s fixed point theorem has been approached early using hexagonal lattices and Sperner’s lemma. Cohomology group computations reduce in the discrete to linear algebra.

- A probability space can be approximated in a finite way by finite sigma algebras. In ergodic theory, the tool of Rholin towers or the speed of periodic approximation. For volume-preserving transformations, there is an approximation theorem of Lax: it is possible to approximate any volume preserving transformation by permutations of finitely many “cubes”.

- There is a relation with integrability. How can one discretize a system which is integrable in the continuum. Integrability can be lost. As more discrete the systems become as more difficult, it is to maintain integrability. A naive discretization of the pendulum for example leads to the nonintegrable Chirikov map. It still can be modified to be integrable however. Integrability can be described geometrically through zero curvature conditions. Any notion of integrability is related to commutativity: lets call a system integrable, if every time invariant measure $\mu$ leads to a measure preserving system $(X,T,\mu)$ which has pure point spectrum. This means that we can conjugate the system to a group translation. Group translations on Abelian groups can be approximated by finite permutations.

- The theory of of networks has become a subject by itself even so a network is simply a graph. The term is mostly used for large graphs like proteins, neural nets, transportation or food networks and of course for computer and social networks.

- The notion of polytopes is a “surprisingly delicate task” (Davedso). “Agreeing on a suitable definition is surprisingly difficult” (Richeson). One often defines it as a convex body with polygonal faces or the convex hull of finitely many points in $R^n$. The perils of a general definition have been pointed out since Poincaré. Topologists started with new definitions and define polyhedra as topological spaces which admit a triangularization by simplicial complexes.