Entropy is the most important functional in probability theory, Euler characteristic is the most important functional in topology. Similarly as the twins Apollo and Artemis displayed above they are closely related.

Introduction

This blog mentions some intriguing analogies between entropy and combinatorial notions. One can push the analogy in an other direction and compare random variables with simplicial complexes, Shannon entropy with Euler characteristic.

Random variables and simplicial complexes seem to be unrelated as they appear in completely different fields. But they become siblings, if we look at them together. A random variable is defined over a probability space and one can assign interesting quantities to random variables and entropy is one of them. Euler characteristic is an interesting quantity in topology. It is a number one can assign to simplicial complexes.

| The entropy functional is the most important quantity in probability theory. | The Euler characteristic functional is the most important quantity in topology. |

Despite the fact that these two functionals appear utterly different at first, there are many parallels, both can be identified by some additive and multiplicative conditions as well as a gauge with a fixed element. Alternatively, one can see each of them as a unique fixed point of a renormalizations map, one for random variables, and one for simplicial complexes. The two quantities are then associated with central limit theorems.

Some history

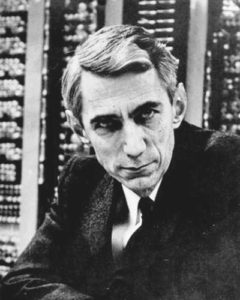

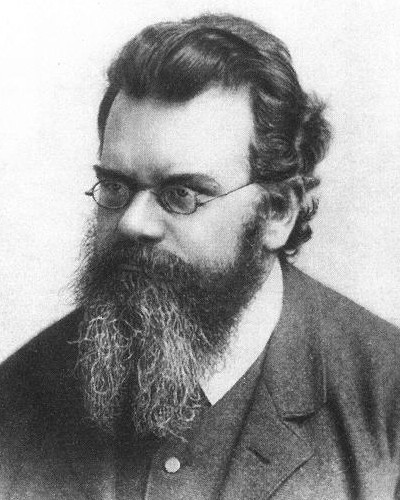

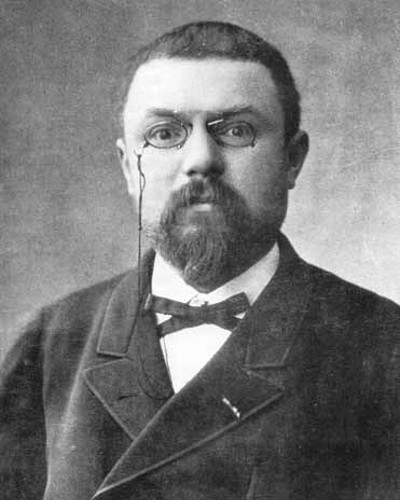

Here are four pioneers. Boltzmann and Shannon for entropy and Euler and Poincaré for characteristic.

|

|

|

|

| Leonhard Euler (1707 – 1783) | Ludwig Boltzmann (1844 – 1906) | Henry Poincaré (1884 – 1912) | Claude Shannon (1916 – 2001) |

Seriously guys! You four need to lighten up!

Boltzmann introduced entropy in 1872 in his kinetic theory of gases. The concept has been around earlier, like in 1850 when Rudolf Clausius stumbled upon it. Euler observed Euler characteristic in 1751. Lets first look at Euler characteristic.

There is always the historical question about first appearance. Already Descartes saw experimentally in 1639 that the Euler formula v-e+f=2 holds for a few Platonic solids. This is an experimental verification and is a first step. Proving a theorem is a completely different matter. [This is paralleled for almost every theorem. For example, Pythagoras was first experimentally seen on Clay tablets, but these are special cases. Seeing a special case is not the same than proving a general theorem.]

And then there is the generality. Euler characteristic has for a long time been a murky notion even in higher dimensions. Casual definitions do not state clearly what the k-dimensional faces are. Now for a Platonic solid like a cube we can by inspection (or Euclidean realization as a brick) see what the faces are but this is not clear for general networks, even when restricting to polyhedra. But the notion of Polyhedron has probably the worst track record in the entire realm of mathematics. Unclear definitions often lead to wrong theorems. In the case of Euler characteristic, it was skillfully explained by Lacatos in his dialogs “Proof and refutation”. The story is also well told in “Euler’s gem”. Branko Gruenbaum also outlined the difficulty well at various places. Gruenbaum wisely restricts his book on polytopes to convex ones, where the ambiguities disappear (a finitist not accepting Euclidean space would today maybe reformulate things using discrete CW complexes). The confusion starts much earlier already in one dimension, with the concept of polygon in school mathematics. (The Wikipedia article does a pretty good job with explaining the difficulties in that case, but less so in the case of polyhyedra). The problem with polyhedra and polytopes is that many different notions exist in the literature. The notion of finite abstract simplicial complex elegantly cuts through the historically grown ambiguities and additionally builds the possibly simplest mathematical construct imaginable.

Fortunately, for finite simplicial complexes, one has not only one of the simplest mathematical structures we know (there is only one axiom!) but also one of the most elegant definitions of the Euler characteristic functional: just super count the simplices. We will see why super counting is much better than counting itself: it will lead to a scale invariant notion and so go over to the continuum limit. The elegance of abstract simplicial complexes is similar as the elegance and beauty of set theoretical topology which completely avoids all the historical balast and confusions about the concept of limit for example. The definition of continuity as the property that the preimage of an open set is an open set not only avoids limits (and is so really in a “calculus without limits = quantum calculus) spirit, it also makes perfectly sense for a strict finitist who believes the concept of infinitiy is a doomsday notion. Indeed, one can look at finite topological spaces, for example on simplicial complexes.

Now lets turn to entropy. Probability theory at the time of Boltzmann was in a terrible state of Woodoo. Only Kolmogorov cleared up the mess by placing the theory firmly on measure theory and giving clear definitions of what random variables or stochastic processes are. So, it is not surprising that the notion of entropy which Boltzmann considered was also not very clear. Shannon defined entropy in a discrete setting in 1948. Today, entropy is defined for discrete probability measures (for which no zero probabilities are possible) as well as absolutely continuous random variables for which the function f(x) log(f(x)) is integrable. This is also called differential entropy. For random variables which have a singular continuous law (the generic case), one has to go through a limiting process but this is always a bit unsatisfactory as we need a definition which is independent of the approximation.

Shannon dealt only with discrete distributions and was so mathematically on safe grounds. Many other notions of entropy have been introduced since, in particular when dealing with dynamical systems, the theories of time evolutions. The topological entropy (Adler, Konheim and McAndrew) and the metric entropy (Kolmogorov,Sinai) are examples. The mathematical frame work and language is surprisingly close to the frame work and language seen in statistical mechanics even so these notions are defined on the category of topological or measure theoretical dynamical systems and not just notions of a random variable, as Boltzmann or Shannon did.

The objects

| A finite random variable is a random variable taking finitely many values. The data are completely determined by a probability measure p on a finite set V. | A finite simplicial complex is a finite set V equipped with a set of non-empty subsets which is invariant under the operation of taking finite non-empty subsets. |

Definitions of entropy were first given by Boltzmann, and in a modern context by Shannon. Euler characteristic was first experimentally defined by Descartes. For two dimensional sphere complexes, the formula v-e+f=2 was proven by Euler. The higher dimensional notions were then developed by Schläfli, Poincaré and later to finite abstract simplicial complexes.

| The entropy functional for a random variable X is defined with P[X=x]=px as H(X) = – ∑x px log(px). where the sum is over all points x for which the value of the probability px is not zero. |

The Euler characteristic functional for a simplicial complex X is defined as χ(G) = ∑x (-1)dim(x) where the sum ranges over simplices x of the complex and where dim(x)=|x|-1 with |x|=cardinality. |

Boltzmann was interested in entropy in a statistical mechanics frame work. He defined entropy as the expectation of the logarithm of Wahrscheinlichkeit W=1/P. Shannon was interested in entropy in an information theory frame work. It turns out that we can assign entropy to data. It can be measured by data compression. High entropy data can not be compressed well, while low entropy data can.

The origins of Euler characteristic started with the study of platonic solids, then more general polyhedra, then polytopes, graphs and finally was defined for simplicial complexes. Low dimensional notions are still entrenched widely even among mathematicians. Historically for example, there has been huge interest in one dimensional objects (curves, Riemann surfaces = 1 dimensional complex curves), where the Euler-Poincare relations express Euler characteristic as b0-b1 with two Betti numbers. For a connected curve this is 1-g, where g is the genus. So, for one dimensional connected objects, the genus plays an important role. It is the number of holes in a Riemann surface. It is no wonder therefore that also in full generality (when not restricting to the one dimensional world), the number

plays an important role. It appeared for example as diagonal Green function elements in the Super Green story which is still in the works.

Question

If one has a functional, it is natural to ask whether it can be singled out from basic principles. This is indeed possible, both for entropy as well as for Euler characteristic. For entropy, this was done already by Shannon. For Euler characteristic, the characterization has been known to combinatorial topologists since about the same time. In both cases we deal with finite structures, finite probability spaces on one side and finite complexes on the other side. To make the comparison more compelling, lets look at the exp entropy ψ(X) = exp(H(X)).

The Cartesian product

The defining property deals in both cases with products. In the case of probability spaces we deal with random variable taking finitely many values. A simplicial complex is a finite set V called base equipped with a set of non-empty subsets with the property that it is invariant under the process of taking non-empty subset.

| The Cartesian product of two random variables is obtained by realizing them as independent. This means that we look at the probability space on which the points are the possible pairs of values (x,y) = (X(w),Y(w)) and where p(x,y) = p(x) p(y). | The Cartesian product of finite abstract simplicial complexes X,Y is defined as a complex on the Cartesian product of the sets V,W of X,Y. First define the graph on X x Y, where two pairs are connected if one contains the other. Then take the Whitney complex of that graph, the set of cliques in the graph. |

| The exponentiated entropy of a product is the product of the entropies of the factors. | The characteristic of a product is the product of the characteristics of the factors. |

Additivity

If X is a random variable, then E[f(X)] is the expectation of f(X). In a finite frame work this is . For example, E[X] = ∑x x P[X=x] is the expectation of X itself.

If G is a simplicial complex, then v(G) is its f-vector. It is a finite vector with vk(G) counting the number of k-dimensional simplices in G. For example, v0 is the number of vertices, zero dimensional simplices in G, v1 is the number of edges, one-dimensional simplices in G etc. The number of facets, the number of highest dimensional simplices in a complex is called its volume.

| A functional φ on random variables is called additive if it is of the form E[f[X]] for some function f. | A functional φ on complexes is called additive if it is of the form F.v(G) for some vector F. |

The valuation property is φ(X+Y) = φ(X) + φ(Y) in the sense that φ(X ∪ Y) + φ (X ∩ Y) = φ(X) + φ(Y). Note that the symmetric difference is not a simplicial complex as simplicial complexes can not be added. (They are a subset of the linear space of all chains defined by the complex.)

This parallels that probability measures do not form a linear space but are part of a larger linear space, the space of signed measures.

More notation

We say a random variable is non-trivial if it is not constant. We say a simplicial complex is non-trivial if it is not the empty complex.

We say a functional on random variables is non-trivial if it is not constant 0. We say a functional on simplicial complexes is non-trivial if it is not constant 0.

We say, a functional on random variables is normalized if it is 1 on the constant random variables. We say a functional on simplicial complexes is normalized if it is 1 on the one-point simplicial complex.

We say a functional on random variables is multiplicative if it is multiplicative with respect to the Cartesian product of random variables (pairs of IID random variables). We say a functional on simplicial complexes is multiplicative if it is multiplicative with respect to the Cartesian product of simplicial complexes.

Characterization of entropy and characteristic.

| The exponential of Shannon entropy is an example of a normalized, non-trivial, additive and multiplicative functional on random variables. | The Euler characteristic is an example of a normalized, non-trivial, additive and multiplicative functional on simplicial complexes. |

More interesting is that these functionals are unique:

| Shannon Theorem (1948). The exp entropy ψ is the only non-trivial additive and multiplicative normalized functional on random variables. | Mayer Theorem (1942). The Euler characteristic χ is the only non-trivial additive and multiplicative normalized functional on simplicial complexes. |

The Shannon theorem is essentially Theorem 2 in his 1948 article. The earliest reference for the result on Euler characteristic is W. Mayer, W, “A new homology theory. {I}, {II}, “Ann. of Math. (2),43, 1942. Of course, the notions in the last 70 years have considerably changed.

| Here is a proof sketch of Shannon’s theorem: the product property implies f(t)= k log(t). The normalization implies k=1. | Here is a proof sketch of Mayer’s theorem: by the discrete Hadwiger theorem, a valuation is of the form <X,v(G)> where v(G) is the f-vector of G. The product of G with 1 is the Barycentric refinement. Since v(G_1) = A v(G) for an explicit operator A which has only one non-zero eigenvector (1,-1,1,-1 …), the Euler characteristic is unique. |

Measures

| A random variable X defines a law μ defined as the derivative μ = F’ of the cumulative distribution function F(t) = P[X ≤ t] | A finite abstract simplicial complex G has as the law the density of states, the derivative of the integrated density of states of the (Hodge) Laplacian of G. |

While probability spaces can look quite complicated and be large, the law is a measure on the real line. The entropy is completely determined by the law. The Euler characteristic is in some sense also determined by the law but only if we look at operators on discrete forms. Euler characteristic is the super trace of 1 and equivalently by McKean Singer the super trace of exp(-t L), where L is the Hodge Laplacian.

Renormalization maps

| Given a random variable X, define a new variable X1 = X+X’ normalized so that the variance is 1. | Given a simplicial complex X, define a new simplicial complex X1 as the Barycentric refinement of X. |

Both renormalizations can be iterated. The entropy increases under the renormalization map on variables and Euler characteristic is invariant under the Barycentric refinement map.

Central limit theorems

There is also a parallel on universality:

| When iterating the renormalisation map on random variables, it converges to a universal fixed point, the Gaussian random variable. | When iterating the Barycentric renormalization map, the law converges to a universal fixed point, which depends only on the dimension of the complex. |

The renormalization story changes if in the target space of the random variable, the non-compact real line is replaced by a compact abelian Lie group. Then the limiting measure (of course) is the Haar measure. The proof is the same as in the central limit theorem case, as the story trivializes after taking the Fourier transform. I have a small chapter in this text. Statisticians are interested in circular data.

For the Barycentric renormaliation story, see this paper (and a earlier announcement).

Complexity

Both the concept of entropy as well as the concept of Euler characteristic is related to fundamental enigmas. The first one is the question of “time”. Why is there an arrow of time? The second is related to the NP complexity problem as it is close to an NP complete problem.

| Is entropy preserved under fundamental physical phenomena? | Can we compute the Euler characteristic effectively? |

The difficulty with entropy is fundamental as long as we do not know what physical laws are approximations and which physical laws are fundamental. The second law of thermodynamics for example is not a fundamental law which can be proven, but a consequence of too large time scales, properties of matter and time preventing Maxwell deamons.

For Euler characteristic the fundamental difficulty is that we know that counting simplices in graphs is difficult. For graphs of small dimension, there are Morse theoretical fast computations of Euler characteristic through Poincare-Hopf theorems but these computations become hard if graphs have high dimension.

Confusions

All known basic fundamental physical theories (classical mechanics, quantum mechanics relativity) are reversible and so preserve entropy, there is an obvious clash between the scales. Poincaré recurrence for even very small systems would take too long to be observed in the time scales accessible to us. There is an arrow of time due to the fact that we can not keep track of all information (impossibility of the Laplace daemon). There are limitations to matter preventing a Maxwell daemons. All this is hardly of any mystery any more for mathematicians who know about dynamical systems but physical models having to deal with reasonable time scales and experiments have led often to confusions. The clash is so strong that some researchers like Prigogine have proposed to look at irreversible fundamental laws of nature.

Similarly, there have been confusions about Euler characteristic. The story was best told by Lacatos in the book “proofs and refutations”. The story is amusing since during the development of understanding Euler characteristic, there were theorems which proved to be wrong. Again and again. We know now that similarly as with entropy this came from murky and unprecise definitions. What is nice about finite probability spaces or finite abstract simplicial complexes that everything is crystal clear, pure mathematics and no ideological or cultural ballast needs to be carried around. Already physics has much more trouble with that.

| Is there an “arrow of time”? Is entropy fundamentally preserved? | Are there different “complexity classes”? (Church thesis) |

The question of entropy occupies also large scale physisists who look at the mathematics of singularities in Pseudo Riemannian geometry. The question of computational complexity is murky too. In principle, quantum computing could smash through complexity barriers and allow us for example to compute Euler characteristic for very large networks.

[Side remark: while at UT Austin, I visited some famous seminars (mostly out of curiosity). One was the seminar of Weinberg,and an other by Prigogine. The Weinberg seminars were regular and went a couple of times just to see what is happening. They took place in regular class rooms. The Prigogine talk was completely different and resembled more like a religious service. The room was fancy, there was tea served and the speaker was lecturing like a pastor before a devoted public. He had just published his book “The end of Certainty”. It was not a book reading event however.]

Anyway, the book illustrates that confusions are great if one does not stay within pure mathematics.

But mathematicians can also leave the realm of mathematics and speculate. It just has to be stated as such. Speculation is very cheap as one could just throw out random conjectures, random models. By probability, one will be right for some.

I personally have opinions about the above “big questions” but it hardly matters what one believes: if needed to guess I would state that entropy is fundamentally preserved by physical processes but that one has to remember that as there are mathematical facts which are un-observable phenomena (winning at the Petersburg casino for example with 100 dollars entrance fee is never observed even so it is a mathematical fact that we will win eventually as the winning expectation ∑n P[win 2n dollars] 2n = ∑n 2-n 2n is infinity). An other example is Poincaré recurrence in a macroscopic box with a few hundred balls. We never, ever will be able to see the recurrence as the probability is just too low. But Poincaré recurrence is one of the simplest mathematical theorems in ergodic theory and only uses the pigeon hole principle: given an automorphism T of a probability space X and a set A of positive area, then it is not possible that all iterates Tn(A) are disjoint. The problem with Prigogine is that as a chemist and taking experiments seriously as one should, he concludes from the fact that we don’t observe any recurrence, conclude that on a fundamental level the physical laws have to be changed. But even if Prigogine would be right, he would be right in the same way than Democritus or Lucretius were right about atomic theory, just by pure luck. There was no reason at the time of Lucretius to believe in atoms. There is no reason to assume on a fundamental level that due to an observed arrow of time, we need to change the fundamental laws. There are many mathematical theories (Martingale theory in probability theory is one, non-equilibrium statistical mechanics, turbulence theory within partial differential equations or then ergodic theory within dynamical systems theory), which allow very well to deal with the arrow of time and explain it within a frame work. Perfectly and by simple means like for example that typically in an infinite dimensional Hamiltonian system, the system will tend to go up in the frequency range. When looking at a Vlasov gas for example which is a perfectly reversible infinite dimensional Hamiltonian system, the density of the gas will tend to converge, a piston within the system will settle down as we observe. here is a 1 page handout for a class from 2005 or this article with Evan Reed, explaining this a bit.

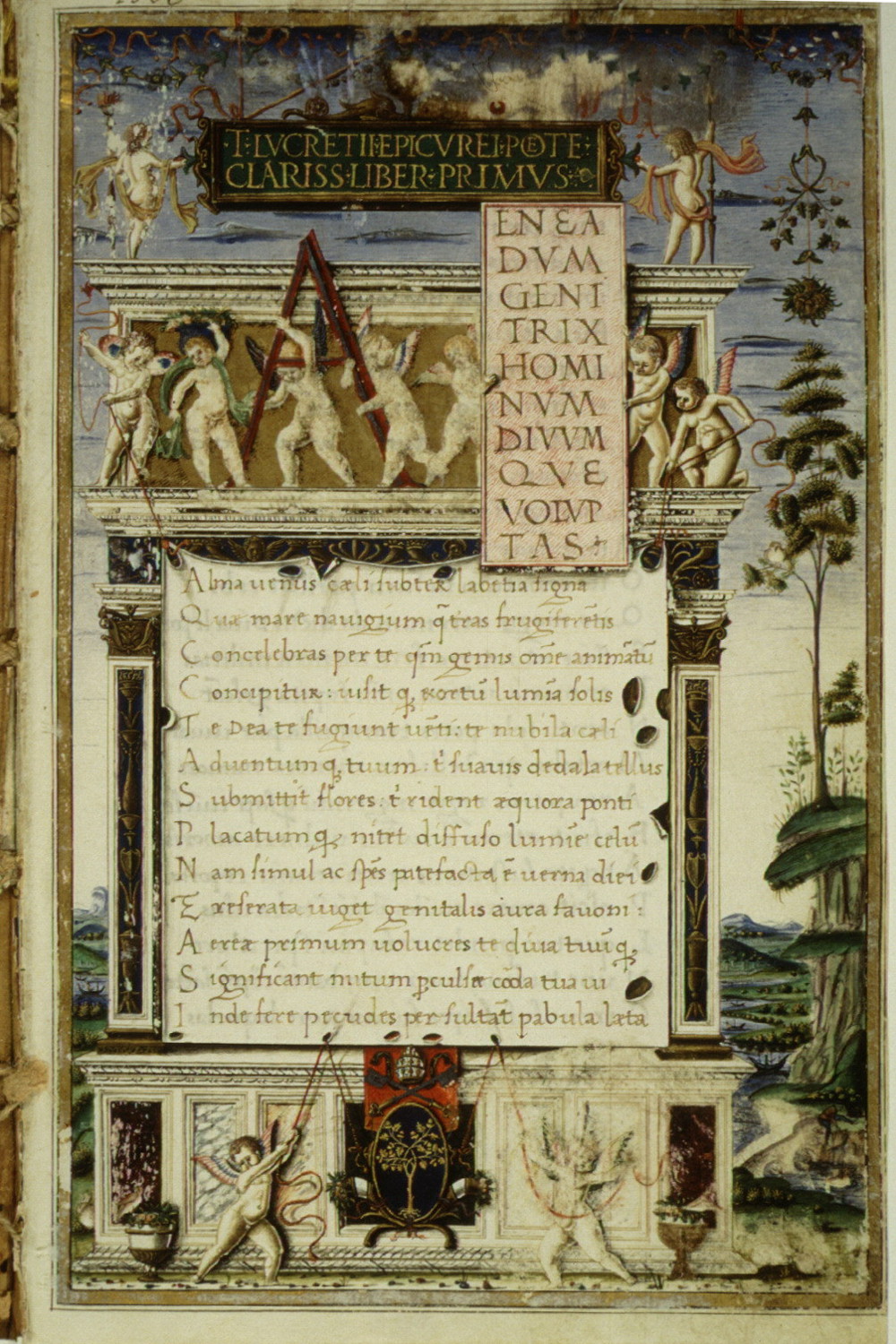

De Rerum Natura, by LucretiusThe end of Certainty, by Prigogine

De Rerum Natura, by LucretiusThe end of Certainty, by Prigogine

On the side of possible quantum complexity classes, I would tend to believe that the Church thesis will remain valid and that similarly as in classical mechanics, the limitations of chaos and quantum mechanics will kick in when working with larger number of qubits. Already classically, we not say for example what the one millionth iterate of the Ulam map T(x)=4x(1-x) is when we start with is. In order to answer this numerically, we would have to be able to process numbers with

digits. In order to do the computation analytically, we would have to store expressions of this size. Both are impossible because there are not enough elementary particles in the universe to write the objects down. In the case of quantum computing, we have not seen these barriers yet, but experimentally observe entanglement problems which look like rounding error problems in classical computing. Any way, it would be very surprising if P=NP was true (even on a quantum level). When pressed to answer whether we ever will be able to compute Euler characteristic polynomially fast, then my guess is yes, maybe because it is so special. We know already that it can be computed polynomially fast on large parts of Erdoes-Renyi probability spaces of graphs but it could also be that it is fundamentally equivalent to the clique counting problem and NP complete. But we don’t know that yet. The question of whether the computation of Euler characteristic is NP complete is an example of a good scientific question because there is a chance that we can answer it within a fixed computational frame work (like Turing machines or quantum computers). The question about whether on a fundamental level entropy is preserved is also a good question within a fixed theory (like classical mechanics or general relativity).

Variational problems

| Maximizing entropy explains some distributions like Gaussian, geometric or uniform distribution. | Extremizing Euler characteristic is a combinatorial variational problem on finite simplicial complexes. |

It has been known since a long time (Gibbs?) that the Gaussian distribution maximizes the entropy among all distributions on the real line, that geometric distribution maximizes the entropy among all distributions on the half line that the uniform distribution maximizes the entropy among all distributions on an interval. For finite probability spaces, the entropy maximizing problem is boring as the uniform distribution again is maximal. The variational problem becomes there more interesting when adding an energy functional. Maximizing free energy = entropy plus free energy, then leads to the Gibbs distribution.

The problem of extremizing Euler characteristic is largely unstudied. I have looked at it a few times, like here or on even dimensional graphs. The problem is very interesting on geometric 4 dimensional graphs, as we can write the Euler characteristic as a sum of local Euler curvature quantities which are averages over Poincaré-Hopf indices, which are again Euler characteristics of two-dimensional random surfaces and so again a sum of two dimensional curvatures. In some sense therefore, it is an average over all possible sectional curvatures at a point. In the continuum, this is the scalar curvature, when integrated is the Hilbert action producing the Einstein equations in the continuum. So, in some sense we can look at Euler characteristic as a quantized Hilbert action. It is called quantized because it takes integer values only.

|

|

| Apollo, son of Zeus and Leto, god of music, Image source | Artemis, daughter of Zeus and Leto, goddess of nature Image source. |